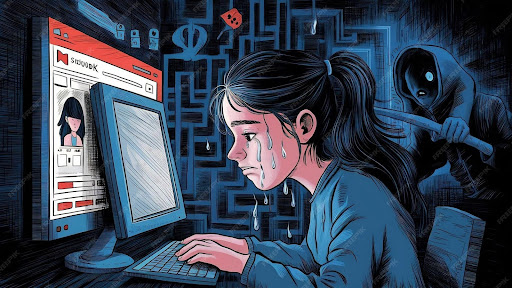

The digital space is changing at light speed, and with it, new opportunities and challenges are emerging. Among the most serious threats of this age are deepfakes: computer-generated video, images, and audio that convincingly mimic real people. Although deepfake tools can be applied creatively in movies, commercials, or educational content, they are increasingly being used to orchestrate fraud, forgery, and cyberattacks.

The Emergence of Deepfake Threats

Deepfakes use machine learning techniques, most notably generative adversarial networks (GANs), to produce realistic but artificial content. Recent attacks have used this technology to impersonate CEOs on video calls, clone voices to authorize wire transfers, and spread fake news on social media platforms.

For individuals, the risk is no smaller. Deepfakes are now used to impersonate candidates in fake job interviews and to fabricate videos that sabotage someone’s reputation. With AI tools advancing day after day, it is becoming more and more difficult to identify a deepfake by eye or ear alone.

Why Deepfakes Are Dangerous in Fraud

The biggest concern with deepfakes is that they exploit trust. People subconsciously rely on visual and auditory cues to judge authenticity. When a video looks real and the voice sounds real, people rarely question it. Fraudsters exploit that trust to obtain money, confidential information, or business strategies.

Moreover, traditional security measures such as passwords and two-step verification are no match for this type of advanced impersonation. The deepfake threat leaves individuals and companies exposed to a new generation of online scams.

AI Tools Against AI Fraud

Fittingly, AI is also part of the answer. AI-based detection tools are being developed to spot the subtle traces that deepfakes leave behind. These scanners identify anomalies such as unnatural blinking, stiff facial movements, inconsistent lighting, or odd voice modulation that can escape human notice.
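To make one of these signals concrete, here is a minimal sketch of a blink-rate check. It assumes some upstream face-landmark tool has already produced one "eye openness" score per video frame; that input, the function names, and every threshold below are hypothetical and chosen for illustration only. Real detectors combine many signals and are trained on data rather than built from a single hand-written rule.

```python
from typing import Sequence


def count_blinks(eye_openness: Sequence[float], closed_below: float = 0.2) -> int:
    """Count open-to-closed transitions in a per-frame eye-openness series.

    `closed_below` is an assumed cut-off, not a calibrated value.
    """
    blinks = 0
    was_closed = False
    for value in eye_openness:
        is_closed = value < closed_below
        if is_closed and not was_closed:
            blinks += 1  # a new blink starts on this frame
        was_closed = is_closed
    return blinks


def blink_rate_is_suspicious(
    eye_openness: Sequence[float],
    fps: float,
    min_per_minute: float = 6.0,   # assumed lower bound, illustrative only
    max_per_minute: float = 40.0,  # assumed upper bound, illustrative only
) -> bool:
    """Return True when the clip's blink rate falls outside the assumed range."""
    if fps <= 0 or len(eye_openness) == 0:
        raise ValueError("need a positive frame rate and at least one frame")
    minutes = len(eye_openness) / fps / 60.0
    rate = count_blinks(eye_openness) / minutes
    return rate < min_per_minute or rate > max_per_minute
```

A clip flagged this way is only a candidate for closer review. Short clips in particular give unreliable blink rates, so a check like this would be one weak signal among several.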

Companies are also investing in AI-driven fraud protection systems that continuously monitor communications, transactions, and web activity for signs of tampering. By integrating machine learning into their cybersecurity measures, companies can detect and deter fraudulent attempts before any actual loss occurs.

How Businesses Can Protect Themselves

To shield themselves from this new generation of cyber deception, companies need a multi-layered security approach. This includes:

- Employee Education: Training employees on how to remain vigilant and detect fraudulent emails.

- AI-Driven Verification: Applying biometric authentication and AI-powered detection tools to verify identities in online transactions.

- Real-Time Vigilance: Using machine learning to track suspicious activity and catch fraud as it happens.

- Policy Reforms: Updating compliance and cybersecurity rules to deal with new threats like deepfake attacks.
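As a rough illustration of the real-time vigilance item, the sketch below flags a transaction whose amount deviates sharply from the amounts seen so far, using a running mean and standard deviation. The class name, the threshold of three standard deviations, and the minimum sample count are all assumptions made for this example. Production fraud systems use far richer features and trained models.

```python
import math


class RunningAnomalyDetector:
    """Flag amounts that sit far from the running mean of earlier amounts."""

    def __init__(self, threshold: float = 3.0, min_samples: int = 5) -> None:
        self.threshold = threshold      # assumed z-score cut-off
        self.min_samples = min_samples  # history needed before flagging
        self.count = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def observe(self, amount: float) -> bool:
        """Return True if `amount` looks anomalous, then add it to the history."""
        suspicious = False
        if self.count >= self.min_samples:
            std = math.sqrt(self.m2 / (self.count - 1))
            if std > 0 and abs(amount - self.mean) / std > self.threshold:
                suspicious = True
        # Welford's online update, so no past amounts need to be stored.
        # Note: flagged amounts are still added to the history in this sketch.
        self.count += 1
        delta = amount - self.mean
        self.mean += delta / self.count
        self.m2 += delta * (amount - self.mean)
        return suspicious
```

Calling `observe` on each incoming amount returns a flag immediately, which is the point of checking in real time: a flagged payment can be held for a second, out-of-band confirmation before the money leaves.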

The Future of Digital Trust

As deepfake technology grows more advanced, it will become harder to tell what’s real and what’s fake in daily life. But with AI-based security software and sound defensive strategies, individuals and businesses can protect themselves from becoming victims of sophisticated scams.

Deepfakes may be the next generation of cyber deception, but with intelligence, imagination, and good security measures, the danger can be controlled. Who wins the AI vs AI war will depend on how quickly we adapt.